Twitter makes it harder to read tweets

Friday, December 18, 2020


Tagged: twitter, curl, web services, apis

Yesterday I touched off a teacup-storm when I tweeted:

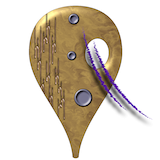

> Twitter cut off the ability to read a tweet by fetching its URL with a normal HTTP GET. You need Javascript or an authenticated API call.
>
> I know most people don't care. It's normal web browsing, with all the data-hoovering we've come to expect.
>
> Just want to say that it matters.

Quite a few people started discussing this, and it wound up on this Hacker News thread.

What's going on here?

The problem here might not be clear. After all, if you click my tweet link in a regular web browser, you'll see the tweet and its replies, right?

That's because a regular web browser supports Javascript, cookies, and everything else that's been thrown into the Web over the years. When you browse like this, Twitter can cookie-track you (notice that I'm using Safari's Private Browsing feature). They can nag you to log in. They can show you ads. All the usual stuff.

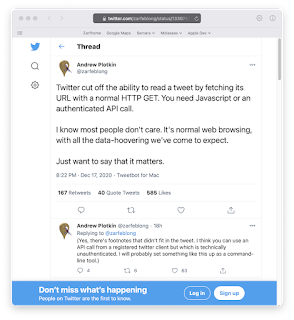

If you try turning off Javascript, you see this:

> JavaScript is not available.

But let's reduce the situation to bare essentials: a raw HTTP GET request using curl.

```
curl -v https://twitter.example/zarfeblong/status/1339742840142872577

HTTP/2 400

This browser is no longer supported.

Please switch to a supported browser to continue using twitter.com. You can see a list of supported browsers in our Help Center.
```

I've elided most of the headers, but that's an HTTP "400: Bad Request" error response.

In the past, all of these requests would work; you could read a tweet this way. Twitter added warnings a while back ("Do you want the mobile site?") but you could get through if you wanted.

However, a few weeks ago, Twitter added a warning saying that on Dec 15th, they would remove support for legacy browsers. That's what changed this week. I didn't catch the exact time, but on Dec 15th, they started returning this error page which includes no information about the tweet.

What problems does this cause?

I admit up front that my browsing setup is weird. My default browser has JS turned off; it has a strict cookie expiration limit. This is hardcore privacy mode and most people don't do it. Lots of web pages work badly for me. But most of the web works at least a little bit -- when I go to a web page, I can usually see what's there.

(Of course, if I want to buy something or edit a Google doc, I need to switch modes. I use four different browsers and about six or seven different profiles with different levels of privacy protection. I told you it was weird.)

The point is, I am willing to trade off convenience for privacy. But as of Dec 15th, when I go to a Twitter URL, I see nothing. The tradeoff just got a little worse.

This isn't about me, though. From followup discussion:

- My friend Jmac is working on a tool which displays chat from several sources, including Twitter, in a single window. Twitter broke it.

- Keybase is an open platform which supports personal end-to-end encryption. It uses Twitter as one (of several) means of identity verification. Twitter broke that.

- Someone in the HN thread mentioned a script they use to check for broken links on their web site. Twitter broke that (for links to tweets).

- I've heard that previews of tweets on other chat/discussion platforms have also broken. (Not sure which ones, though.)
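To make the link-checker case concrete, here is a minimal sketch of what such a script might look like (not the actual script from the HN thread, which isn't shown here). It assumes URLs arrive one per line on stdin and treats any status outside 2xx/3xx as broken; `check_links` is a hypothetical name. Since Dec 15th, a plain-curl fetch of a tweet URL reports `400`.

```shell
#!/bin/sh
# Hypothetical link checker: reads URLs (one per line) on stdin and prints
# "STATUS URL" for every URL whose HTTP status is not 2xx or 3xx.
check_links() {
  # "|| [ -n "$url" ]" keeps a final line that has no trailing newline.
  while IFS= read -r url || [ -n "$url" ]; do
    [ -z "$url" ] && continue
    # -s: no progress meter; -o /dev/null: discard the body;
    # -w '%{http_code}': print only the numeric status code.
    status=$(curl -s -o /dev/null -w '%{http_code}' "$url")
    case "$status" in
      2??|3??) ;;                                # success or redirect: OK
      *) printf '%s %s\n' "$status" "$url" ;;    # 4xx, 5xx, or 000 (no reply)
    esac
  done
}
```

Feed it a file of links with `check_links < links.txt`; only the failures are printed.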

To be more ideological about it (yes I will), Twitter is asserting that people reading tweets is not their priority. Twitter is not about people communicating. They'll block communication if it pushes up their corporate numbers.

This was my summary from last night (slightly edited for clarity):

> The point of the web is that a public URL refers to information. You can GET it. There may be all sorts of elaboration and metadata and extra services, but that HTTP GET always works.
>
> Tools rely on this. Blogs pull previews via HTTP. RSS readers collect and collate posts. People peek pages using fast or low-bandwidth tools. It's not part of any company's value proposition or stock price; it just works. Until somebody breaks it.
>
> The error message that appears now when I do this on Twitter (and the warning that popped up a few weeks ago) says that Twitter is dropping support for legacy browsers. But you support legacy browsers with an HTTP reply that contains the tweet text. That's all.
>
> The only reason not to do this is that someone at Twitter said, "Why are we letting people just read tweets? That doesn't benefit us. They need The Full Twitter Experience." Which boils down to selling ads.
>
> Yes, I could be using the API in various ways without directly hooking into the ad firehose. But then at least [Twitter] controls the experience -- [they] give out the API keys, [they] can decide to cut people off or otherwise control what's going on.
>
> If I can just read a public tweet the same way every other web document works, then I can make the experience work how I want; I can have the conversation on my own terms and in my own framework.
>
> To Twitter, this is anathema. So Twitter cut it off.
>
> That's all. That's where we are. I don't expect different because Twitter is an ad company. I expect other changes in the future which will make Twitter even less hospitable to me. Maybe next week [or] maybe in ten years -- I'll find out when it happens.

--@zarfeblong, Dec 17th (or on nitter!)

Alternatives and workarounds

Of course there are many.

Twitter's public API

Twitter supports a web API. You can use it from any language you like (Perl, Python, whatever). In fact you can use it with curl or wget if you enjoy web plumbing.

As is usual for big web APIs, you have to register as an app developer and request an access key. This is free, but it puts the process under Twitter's control. In theory they can revoke your access if they think you're a baddie.
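For a sense of what that plumbing looks like, here is a sketch of fetching a single tweet with curl. It assumes the v2 Tweet lookup endpoint (`GET /2/tweets/:id`) and an app-only bearer token issued through the developer registration just described; the function name `fetch_tweet_api` and the `BEARER_TOKEN` variable are hypothetical, and Twitter's endpoints and access rules are theirs to change.

```shell
#!/bin/sh
# Sketch: fetch one public tweet through Twitter's v2 API with curl.
# Assumptions: the v2 Tweet lookup endpoint (GET /2/tweets/:id) and an
# app-only bearer token stored in the hypothetical BEARER_TOKEN variable.
fetch_tweet_api() {
  tweet_id="$1"
  # ${VAR:?msg} stops the script with an error if the token is unset or empty.
  curl -s \
    -H "Authorization: Bearer ${BEARER_TOKEN:?set BEARER_TOKEN to your API bearer token}" \
    "https://api.twitter.com/2/tweets/${tweet_id}"
}
```

Running `fetch_tweet_api 1339742840142872577` with a valid token should print a JSON reply whose `data` object holds the tweet's `id` and `text`.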

Also, the API has rate limits. This isn't a shock; every web service has some kind of rate limit, even if it's just Cloudflare or your ISP cutting you off. But Twitter has made it clear that they limit use of the API to make sure that third-party clients never get too popular. They don't exactly want to squash third-party clients, but they see them as a threat to be contained.

(See this 2012 blog post -- particularly the bit about "if you need a large amount of user tokens". I wrote more about this in 2018, when Twitter deprecated some functionality from their API.)

Nitter.net

Several people referred me to Nitter.net, an alternative Twitter front-end which doesn't require Javascript. Pretty much aimed at people like me! You can substitute Nitter in any public tweet URL and get a URL that works in all the ways we've been talking about. For example, https://nitter.net/zarfeblong/status/1339742840142872577:

```shell
curl -v https://nitter.net/zarfeblong/status/1339742840142872577
```

This downloads an HTML document which contains my original text, and all the replies. You can also visit https://nitter.net/zarfeblong to see my Twitter profile, except there's no ads, no promoted crap, and it loads fine (and fast!) without Javascript.

I'm sure that Nitter uses Twitter's API, mind you. It is, de facto, a third-party Twitter client. Which means that -- again, in theory -- Twitter could just cut it off. I don't know whether they're likely to. At any rate, at present, Nitter is a useful interface.
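The Nitter substitution is mechanical enough to script. A minimal sketch, assuming links come in as `twitter.com` URLs, optionally with a `www.` or `mobile.` prefix; `to_nitter` is a hypothetical name, and anything that isn't a Twitter URL passes through unchanged.

```shell
#!/bin/sh
# Hypothetical helper: rewrite twitter.com URLs (given as arguments, or one
# per line on stdin) to the equivalent nitter.net URL, keeping the path.
to_nitter() {
  if [ "$#" -gt 0 ]; then
    printf '%s\n' "$@"
  else
    cat
  fi | sed -E 's#^https?://(www\.|mobile\.)?twitter\.com/#https://nitter.net/#'
}
```

For example, `to_nitter https://twitter.com/zarfeblong/status/1339742840142872577` prints `https://nitter.net/zarfeblong/status/1339742840142872577`.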

Faking your User-Agent

Turns out that if you're Google, you can GET tweets all you like! And pretending to be Google is pretty easy.

```shell
curl -v -A "Mozilla/5.0 (compatible; Googlebot/2.1; +http://google.com/bot.html)" https://twitter.example/zarfeblong/status/1339742840142872577
```

This sends Google's User-Agent string (instead of the standard curl string). Twitter happily sends back the same web page I was getting before Dec 15th.

Now, this web page asks me to turn on Javascript. But the text of my tweet is in there (along with people's replies in the thread). You can extract the text by looking for the og:description meta tag, part of the Open Graph metadata that sites provide for link previews.
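A crude version of that extraction fits in one `sed` command. This sketch assumes the tag appears on a single line as `<meta ... property="og:description" ... content="...">`, with double quotes and `property` before `content`; Twitter's real markup may differ, and HTML entities are left encoded. `og_description` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: print the content of the first og:description <meta>
# tag in HTML read from stdin. Line-based pattern matching, not an HTML parser:
# assumes double-quoted attributes, property= before content=, one-line tag.
og_description() {
  sed -n 's/.*<meta[^>]*property="og:description"[^>]*content="\([^"]*\)".*/\1/p' | head -n 1
}
```

Pipe the Googlebot request from above into it: `curl -s -A "Mozilla/5.0 (compatible; Googlebot/2.1; +http://google.com/bot.html)" https://twitter.example/zarfeblong/status/1339742840142872577 | og_description`.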

Is this a viable workaround? It certainly works. But it's a hack. Twitter's policy is that I should not be allowed to get this page. Only Google can, because Google is king. I'm a peon. Their enforcement of this policy is crude and easy to cheat -- but that's a bug. Maybe they'll fix the bug. Maybe it's not important enough to fix. Again, I don't know.

It's also worth noting that the page you get this way is 586 kB. The Nitter.net equivalent is 28 kB, about a twentieth of the size. Twitter has piled a lot of junk into their UI.
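That comparison is easy to rerun by counting the bytes curl downloads. A sketch: `page_bytes` is a hypothetical helper, any curl options (like the `-A` above) go before the URL, and since curl doesn't ask for a compressed response unless given `--compressed`, the count is roughly the uncompressed size. Expect the numbers to drift as both sites change.

```shell
#!/bin/sh
# Hypothetical helper: print how many bytes of response body curl receives.
# Extra curl options (e.g. -A "<user agent>") can be passed before the URL.
page_bytes() {
  # tr strips the padding some wc implementations print before the number.
  curl -s "$@" | wc -c | tr -d ' '
}
```

For instance, compare `page_bytes -A "Mozilla/5.0 (compatible; Googlebot/2.1; +http://google.com/bot.html)" https://twitter.example/zarfeblong/status/1339742840142872577` with `page_bytes https://nitter.net/zarfeblong/status/1339742840142872577`.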

So now what?

Honestly I haven't decided. I think I'm going to set up my default browser to redirect twitter.com URLs to nitter.net. That will take care of most of my day-to-day needs.

(I do use Twitter clients, by the way. Tweetbot on Mac, Echofon on iOS. But I try to use them in a mindful way. I don't want them to pop up just because I clicked somewhere.)

Mostly, this is an opportunity to look clearly at Twitter and remember that it does not love us. It's a commercial social network; we are its fuel and in its fire we shall burn. I use Twitter, but -- as with the web in general -- I want to remain aware of the tradeoffs.

For what it's worth, Mastodon never got traction for me. Sorry. My private social circles are now on Slack and Discord. Slack just got bought. Maybe it'll be okay. Discord's heel-turn is still in the unclear future. I don't know. We have to stay flexible.

So, you know... the usual story. Enjoy your holidays. Stay home and videochat. Play some games.